In this article, we’ll see how to use the Generalized Hough Transform with OpenCV to do shape-based matching.

This algorithm takes points along the contours of an object, extracted with a Canny filter. For each point, the gradient orientation is computed from two Sobel filters, one horizontal and one vertical. This data is stored in an R-table, which is then used to find similar features in an image.
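To make the R-table idea concrete, here is a minimal sketch of the classical, translation-only version of the transform, written with the standard library only. It is not OpenCV's implementation (the Guil variant used later also searches rotation and scale), and the names `EdgePoint`, `Offset`, `buildRTable` and `castVotes` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical types for illustration; these are not OpenCV classes.
struct EdgePoint {
    float x, y;    // position of a contour pixel (taken from the Canny output)
    float dx, dy;  // horizontal and vertical Sobel responses at that pixel
};

struct Offset {
    float rx, ry;  // vector from a contour pixel to the template's reference point
};

// One bucket of offsets per quantized gradient orientation.
using RTable = std::vector<std::vector<Offset>>;

// Map a gradient (dx, dy) to a bucket index in [0, levels).
int orientationBucket(float dx, float dy, int levels) {
    assert(levels > 0);
    const float twoPi = 6.28318530718f;
    float angle = std::atan2(dy, dx);  // radians, in [-pi, pi]
    if (angle < 0.0f) angle += twoPi;  // shift to [0, 2*pi]
    const int bucket = static_cast<int>(angle / twoPi * static_cast<float>(levels));
    return bucket % levels;            // wrap the rare case where rounding yields `levels`
}

// Learning step: file every template contour pixel under its orientation.
RTable buildRTable(const std::vector<EdgePoint>& templateEdges,
                   float refX, float refY, int levels) {
    assert(levels > 0);
    RTable table(static_cast<std::size_t>(levels));
    for (const EdgePoint& p : templateEdges) {
        table[orientationBucket(p.dx, p.dy, levels)].push_back({refX - p.x, refY - p.y});
    }
    return table;
}

// Detection step: each image contour pixel votes for candidate reference points.
std::vector<int> castVotes(const RTable& table, const std::vector<EdgePoint>& imageEdges,
                           int width, int height) {
    assert(!table.empty() && width > 0 && height > 0);
    std::vector<int> accumulator(static_cast<std::size_t>(width) * height, 0);
    const int levels = static_cast<int>(table.size());
    for (const EdgePoint& p : imageEdges) {
        for (const Offset& o : table[orientationBucket(p.dx, p.dy, levels)]) {
            const long cx = std::lround(p.x + o.rx);
            const long cy = std::lround(p.y + o.ry);
            if (cx >= 0 && cx < width && cy >= 0 && cy < height) {
                ++accumulator[static_cast<std::size_t>(cy) * width + cx];
            }
        }
    }
    return accumulator;  // row-major; peaks mark likely object positions
}
```

In this simplified form, a peak in the accumulator corresponds to a position where many contour pixels agree on the same reference point. The vote threshold plays the same role as the position threshold discussed later.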

To test it, we’ll reuse a project described in another post, where we produced images of thin sheet metal parts for a bin-picking application.

https://vivienmetayer.com/en/using-blender-to-simulate-images-a-the-beginning-of-a-vision-project/

The code is available on GitHub:

https://github.com/vivienmetayer/Generalized_Hough_OpenCV_sample/blob/master/Geometric_matching.cpp

OpenCV 4.3 is used, built with CUDA support to get the GPU version of the algorithm, which is much faster.

    #include <iostream>
    #include "opencv2/opencv.hpp"
    #include "opencv2/cudaimgproc.hpp"

    using namespace std;
    using namespace cv;

    const bool use_gpu = true;

    int main()
    {
        Mat img_template = imread("template.png", IMREAD_GRAYSCALE);
        Mat img = imread("image.png", IMREAD_GRAYSCALE);

We start by reading the image that contains the parts to detect, and the template. The template shows a single part, correctly oriented and cropped.

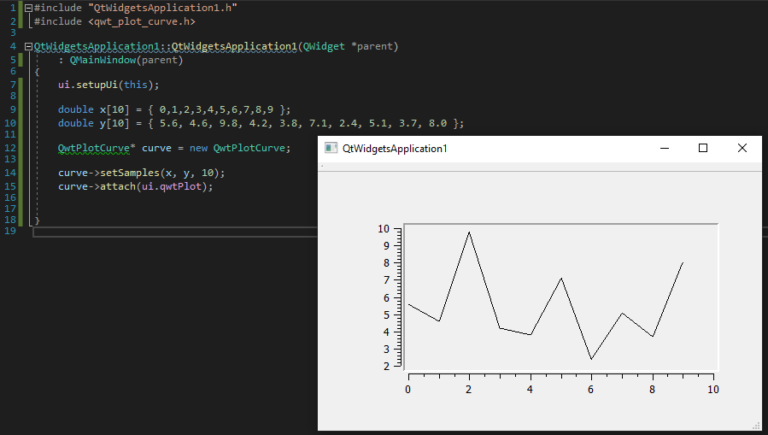

        Ptr<GeneralizedHoughGuil> guil = use_gpu ? cuda::createGeneralizedHoughGuil()
                                                 : createGeneralizedHoughGuil();

        guil->setMinDist(100);
        guil->setLevels(360);
        guil->setDp(4);
        guil->setMaxBufferSize(4000);

        guil->setMinAngle(0);
        guil->setMaxAngle(360);
        guil->setAngleStep(1);
        guil->setAngleThresh(15000);

        guil->setMinScale(0.9);
        guil->setMaxScale(1.1);
        guil->setScaleStep(0.05);
        guil->setScaleThresh(1000);

        guil->setPosThresh(400);

        //guil->setCannyHighThresh(230);
        //guil->setCannyLowThresh(150);

Next we define the main algorithm settings.

- MinDist : minimum distance between two detected objects

- Levels : R-table size (number of rows)

- Dp : ratio between image size and search grid size

- MaxBufferSize : maximum size of the internal buffers. If it is too low, the entire image cannot be scanned.

- PosThresh : number of votes required to validate a match. This number depends on many other parameters, so it often has to be adjusted when the others are modified.
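To get a feel for how these settings interact, the sketch below estimates the size of the search space from the values used above. It is an illustration only: `SearchSpace`, `stepCount` and `estimateSearchSpace` are hypothetical helpers, and the way OpenCV sizes its internal grid and counts angle and scale steps may differ from this simple inclusive count.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper for illustration; not part of the OpenCV API.
struct SearchSpace {
    int gridCols;    // accumulator width after dividing the image width by dp
    int gridRows;    // accumulator height after dividing the image height by dp
    int angleCount;  // number of rotation hypotheses
    int scaleCount;  // number of scale hypotheses
};

// Number of values visited when stepping from lo to hi (both included) by step.
int stepCount(double lo, double hi, double step) {
    assert(step > 0.0 && hi >= lo);
    // The small epsilon absorbs floating-point error such as (1.1 - 0.9) / 0.05.
    return static_cast<int>(std::floor((hi - lo) / step + 1e-9)) + 1;
}

SearchSpace estimateSearchSpace(int imageCols, int imageRows, double dp,
                                double minAngle, double maxAngle, double angleStep,
                                double minScale, double maxScale, double scaleStep) {
    assert(imageCols > 0 && imageRows > 0 && dp > 0.0);
    SearchSpace s;
    s.gridCols = static_cast<int>(std::ceil(imageCols / dp));
    s.gridRows = static_cast<int>(std::ceil(imageRows / dp));
    // Assumption: both ends of each range are counted, so 0 and 360 degrees
    // appear as two hypotheses even though they describe the same rotation.
    s.angleCount = stepCount(minAngle, maxAngle, angleStep);
    s.scaleCount = stepCount(minScale, maxScale, scaleStep);
    return s;
}
```

For a hypothetical 1920 x 1080 image, calling `estimateSearchSpace(1920, 1080, 4.0, 0.0, 360.0, 1.0, 0.9, 1.1, 0.05)` yields a 480 x 270 grid with 361 angles and 5 scales. A larger Dp shrinks the grid, so votes concentrate in fewer cells, which is one reason PosThresh has to be retuned when Dp changes.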

Canny filter settings can also be set on the detector, but we’ll run the filters ourselves so that we can check whether the resulting contours are the ones relevant to our problem.

        double sobelScale = 0.05;
        int sobelKernelSize = 5;
        int cannyHigh = 230;
        int cannyLow = 150;

        // Contours and gradients of the image to search
        Mat canny;
        Canny(img, canny, cannyLow, cannyHigh);
        namedWindow("canny", WINDOW_NORMAL);
        imshow("canny", canny);

        Mat dx;
        Sobel(img, dx, CV_32F, 1, 0, sobelKernelSize, sobelScale);
        namedWindow("dx", WINDOW_NORMAL);
        imshow("dx", dx);

        Mat dy;
        Sobel(img, dy, CV_32F, 0, 1, sobelKernelSize, sobelScale);
        namedWindow("dy", WINDOW_NORMAL);
        imshow("dy", dy);

        // Contours and gradients of the template
        Mat canny_template;
        Canny(img_template, canny_template, cannyLow, cannyHigh);
        namedWindow("canny_template", WINDOW_NORMAL);
        imshow("canny_template", canny_template);

        Mat dx_template;
        Sobel(img_template, dx_template, CV_32F, 1, 0, sobelKernelSize, sobelScale);
        namedWindow("dx_template", WINDOW_NORMAL);
        imshow("dx_template", dx_template);

        Mat dy_template;
        Sobel(img_template, dy_template, CV_32F, 0, 1, sobelKernelSize, sobelScale);
        namedWindow("dy_template", WINDOW_NORMAL);
        imshow("dy_template", dy_template);

We apply the filters with these settings and display the resulting contours.
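As a reminder of what the two Sobel images contain, here is a minimal standard-library sketch of the horizontal and vertical derivatives at a single pixel. It uses a 3x3 kernel for brevity, whereas the code above asks OpenCV for a kernel size of 5. `GrayImage`, `sobelDx` and `sobelDy` are hypothetical names, not OpenCV functions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal image type for illustration; the article itself uses cv::Mat.
struct GrayImage {
    int cols;
    int rows;
    std::vector<float> pixels;  // row-major, cols * rows values

    float at(int x, int y) const {
        assert(x >= 0 && x < cols && y >= 0 && y < rows);
        return pixels[static_cast<std::size_t>(y) * cols + x];
    }
};

// Horizontal derivative (3x3 Sobel) at an interior pixel, multiplied by `scale`.
float sobelDx(const GrayImage& img, int x, int y, float scale) {
    const float right = img.at(x + 1, y - 1) + 2.0f * img.at(x + 1, y) + img.at(x + 1, y + 1);
    const float left  = img.at(x - 1, y - 1) + 2.0f * img.at(x - 1, y) + img.at(x - 1, y + 1);
    return scale * (right - left);
}

// Vertical derivative (3x3 Sobel) at an interior pixel, multiplied by `scale`.
float sobelDy(const GrayImage& img, int x, int y, float scale) {
    const float below = img.at(x - 1, y + 1) + 2.0f * img.at(x, y + 1) + img.at(x + 1, y + 1);
    const float above = img.at(x - 1, y - 1) + 2.0f * img.at(x, y - 1) + img.at(x + 1, y - 1);
    return scale * (below - above);
}
```

On a vertical edge, only the horizontal derivative responds, and the reverse holds for a horizontal edge. The pair (dx, dy) therefore gives the gradient direction at each contour pixel, which is the quantity the R-table is indexed by.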

        vector<Vec4f> position;
        TickMeter tm;
        Mat votes;

        if (use_gpu) {
            // Upload every image to the GPU
            cuda::GpuMat d_template(img_template);
            cuda::GpuMat d_edges_template(canny_template);
            cuda::GpuMat d_image(img);
            cuda::GpuMat d_x(dx);
            cuda::GpuMat d_dx_template(dx_template);
            cuda::GpuMat d_y(dy);
            cuda::GpuMat d_dy_template(dy_template);
            cuda::GpuMat d_canny(canny);
            cuda::GpuMat d_position;
            cuda::GpuMat d_votes;

            guil->setTemplate(d_edges_template, d_dx_template, d_dy_template);

            tm.start();
            guil->detect(d_canny, d_x, d_y, d_position, d_votes);
            // Download the results only if something was found
            if (d_position.size().height != 0) {
                d_position.download(position);
                d_votes.download(votes);
            }
            tm.stop();
        }
        else {
            guil->setTemplate(canny_template, dx_template, dy_template);

            tm.start();
            guil->detect(canny, dx, dy, position, votes);
            tm.stop();
        }

        cout << "Found : " << position.size() << " objects" << endl;
        cout << "Detection time : " << tm.getTimeMilli() << " ms" << endl;

Finally, the template is given to the algorithm and the detection is launched. For both functions (setTemplate and detect), we use the overload that takes the contour and gradient images.

To use the GPU version, we first convert all the images to GpuMat. The results are retrieved afterwards with the download function.

The detection is timed with a TickMeter to compare the GPU and CPU versions. The advantage of using CUDA is confirmed: the GPU takes about one second to do the matching, whereas the CPU takes almost thirty minutes (measured with a Ryzen 5 3600 and a GTX 1060 6 GB).

        Mat out;
        cvtColor(img, out, COLOR_GRAY2BGR);

        int index = 0;
        // Reinterpret the vote data as 3-channel integers (no copy is made)
        Mat votes_int = Mat(votes.rows, votes.cols, CV_32SC3, votes.data, votes.step);

        for (auto& i : position) {
            Point2f pos(i[0], i[1]);
            float scale = i[2];
            float angle = i[3];

            // Border of the template, placed at the detected pose
            RotatedRect rect;
            rect.center = pos;
            rect.size = Size2f(img_template.cols * scale, img_template.rows * scale);
            rect.angle = angle;

            Point2f pts[4];
            rect.points(pts);
            line(out, pts[0], pts[1], Scalar(0, 255, 0), 3);
            line(out, pts[1], pts[2], Scalar(0, 255, 0), 3);
            line(out, pts[2], pts[3], Scalar(0, 255, 0), 3);
            line(out, pts[3], pts[0], Scalar(0, 255, 0), 3);

            // Number of votes for this match
            int score = votes_int.at<Vec3i>(index).val[0];
            index++;
            string score_string = to_string(score);
            putText(out, score_string, rect.center, FONT_HERSHEY_SIMPLEX, 1.0, Scalar(0, 0, 255), 2);
        }

        namedWindow("out", WINDOW_NORMAL);
        imshow("out", out);
        waitKey();

        return 0;
    }

Now we get the results and overlay them on the image.

In the GPU version, the vote matrix is returned in the wrong format (float*4), which is why we reinterpret its data as an integer matrix.
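The principle behind this reinterpretation can be shown without OpenCV. The sketch below assumes a buffer that is declared as float but actually holds 32-bit integer bit patterns, and recovers the integers byte for byte. Unlike the Mat constructor used in the article, it copies the data. The function names and the three-values-per-match layout are assumptions made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Recover 32-bit integers from a buffer that is typed as float but was
// filled with integer bit patterns. No numeric conversion takes place.
std::vector<std::int32_t> reinterpretAsInt32(const std::vector<float>& raw) {
    static_assert(sizeof(float) == sizeof(std::int32_t),
                  "float and int32_t must have the same size");
    std::vector<std::int32_t> out(raw.size());
    if (!raw.empty()) {
        std::memcpy(out.data(), raw.data(), raw.size() * sizeof(float));
    }
    return out;
}

// First value stored for a given match, which is the one the article's code
// displays as the score. `channelsPerMatch` is 3 for a CV_32SC3-style layout.
std::int32_t firstChannel(const std::vector<std::int32_t>& votes,
                          std::size_t matchIndex, std::size_t channelsPerMatch) {
    assert(channelsPerMatch > 0 && (matchIndex + 1) * channelsPerMatch <= votes.size());
    return votes[matchIndex * channelsPerMatch];
}
```

A plain numeric cast from float to int would give meaningless values here, because the bytes were never floating-point numbers in the first place. Only the type label is wrong, so only the label needs to change.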

For each match, its position and number of votes are displayed on the image as an oriented rectangle and a text label. The rectangle represents the border of the template image.
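For readers curious about what the oriented rectangle involves geometrically, here is a small sketch that computes the four corners from a center, a size and an angle in degrees. It illustrates the rotation only. `Point` and `rectangleCorners` are hypothetical, and the corner order is not guaranteed to match the one returned by OpenCV's RotatedRect.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical point type for illustration; stands in for cv::Point2f.
struct Point {
    float x, y;
};

// Corners of a width x height rectangle rotated by angleDegrees around its center.
std::array<Point, 4> rectangleCorners(Point center, float width, float height,
                                      float angleDegrees) {
    assert(width >= 0.0f && height >= 0.0f);
    const float radians = angleDegrees * 3.14159265358979f / 180.0f;
    const float c = std::cos(radians);
    const float s = std::sin(radians);
    const float hw = width / 2.0f;
    const float hh = height / 2.0f;

    // Corner offsets in the rectangle's own, unrotated frame.
    const Point local[4] = {{-hw, -hh}, {hw, -hh}, {hw, hh}, {-hw, hh}};

    std::array<Point, 4> corners{};
    for (int i = 0; i < 4; ++i) {
        // Standard 2D rotation followed by a translation to the center.
        corners[i].x = center.x + local[i].x * c - local[i].y * s;
        corners[i].y = center.y + local[i].x * s + local[i].y * c;
    }
    return corners;
}
```

With the detection results, the width and height would be the template dimensions multiplied by the detected scale, as in the code above. Since image coordinates have the y axis pointing down, a positive angle in this sketch appears as a clockwise rotation on screen.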